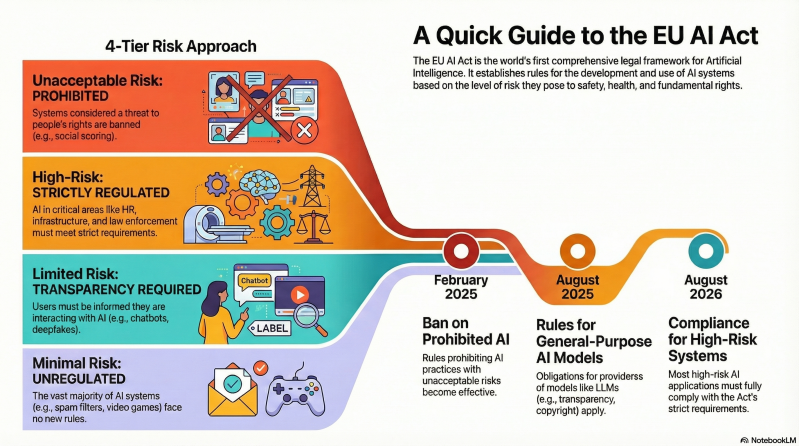

The EU AI Act is the world's first comprehensive law designed to regulate the development and use of Artificial Intelligence (AI). The goal? To ensure safe and trustworthy AI in Europe, while respecting our fundamental rights. At the heart of this law is a risk-based approach. The rules you must follow depend on the potential harm an AI system could cause.

The 4 Risk Levels in a nutshell

Unacceptable Risk (Prohibited since February 2025): This type of AI is completely banned. Examples include systems that manipulate behaviour harmfully (e.g., AI encouraging vulnerable groups to engage in dangerous behaviour) or unlawful ‘social scoring’.

High Risk (Strict Rules, from August 2026): These are systems with a significant impact on our safety, health, or fundamental rights. Examples: an AI tool that screens CVs for job applications (HR decisions), or an AI system that assesses access to credit or social benefits. Providers of these systems must meet strict requirements on risk management, data quality, technical documentation, logging, and human oversight. Companies deploying them must use them according to the provider's instructions, assign competent people to oversee them, and keep the logs they generate.

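Limited Risk (Transparency Obligation, from August 2026): If you use AI that interacts with people, you must be open about it. Example: a customer service chatbot must clearly tell people they are talking to an AI. Generative AI also carries labelling duties. Providers of generative AI tools must mark their output as AI-generated in a machine-readable way. If you use such a tool (for example, to create blog images) and publish a deepfake, meaning realistic image, audio, or video content that could be mistaken for real, you must disclose that it was generated or manipulated by AI.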

Minimal Risk: Most common AI systems fall into this category (think of an email spam filter or Netflix recommendations). No specific obligations apply here beyond the general AI literacy requirement that covers all organisations using AI.
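To make the chatbot transparency duty more concrete, here is a minimal Python sketch of a chat session that shows a disclosure before its first answer. The `ChatSession` class and the disclosure wording are hypothetical. The Act requires that people are informed they are dealing with AI, but it does not prescribe a specific message or implementation.

```python
from dataclasses import dataclass, field

# Hypothetical disclosure text: the Act requires that people are informed,
# it does not prescribe this wording.
AI_DISCLOSURE = "You are chatting with an AI assistant, not a human."


@dataclass
class ChatSession:
    """Minimal chat session that discloses AI use before the first answer."""
    disclosed: bool = False
    transcript: list[str] = field(default_factory=list)

    def reply(self, answer: str) -> str:
        # Prepend the disclosure only to the first reply of the session.
        if not self.disclosed:
            answer = f"{AI_DISCLOSURE}\n{answer}"
            self.disclosed = True
        self.transcript.append(answer)
        return answer


session = ChatSession()
print(session.reply("Hello! How can I help?"))
print(session.reply("Your order has shipped."))
```

In practice the disclosure often lives in the chat interface itself. Attaching it to the first reply is just one simple way to make sure every user sees it.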

What must you do now to comply with these guidelines?

Waiting is not an option. Fines for using prohibited AI practices can reach €35 million or 7% of worldwide annual turnover, whichever is higher. Start today with these steps:
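The ceiling for prohibited practices is simple arithmetic: €35 million or 7% of total worldwide annual turnover, whichever is higher. For SMEs and start-ups, the lower of the two applies. The hypothetical helper below sketches that calculation in Python. It only estimates the legal maximum. Other infringements have lower caps (for example €15 million or 3%), and the actual fine is set case by case. This is not legal advice.

```python
def max_fine_prohibited(turnover_eur: int, is_sme: bool = False) -> int:
    """Rough upper bound of the fine for a prohibited AI practice, in whole euros.

    Assumes the ceiling of EUR 35 million or 7% of total worldwide annual
    turnover: the higher of the two for most companies, the lower for SMEs.
    """
    fixed_cap = 35_000_000
    # 7% of turnover, using integer maths (rounded down) to avoid float rounding.
    turnover_cap = turnover_eur * 7 // 100
    return min(fixed_cap, turnover_cap) if is_sme else max(fixed_cap, turnover_cap)


print(max_fine_prohibited(1_000_000_000))            # → 70000000
print(max_fine_prohibited(100_000_000))              # → 35000000
print(max_fine_prohibited(20_000_000, is_sme=True))  # → 1400000
```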

Inventory and Classify: Map out which AI systems your organisation uses. Classify them according to the four risk levels and stop using prohibited systems immediately.

Focus on Data Quality: For High-Risk AI, the quality of your data is crucial. Ensure the datasets used to train AI are relevant, representative, and as free of errors as possible, to prevent unintended discrimination (bias).

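Invest in AI Literacy: Make sure your staff, from developers to managers, understand the technical, ethical, and social risks of AI. AI literacy has been a legal requirement for all providers and deployers of AI since February 2025, and it is essential for effective human oversight of High-Risk systems.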

Document Everything: Keep detailed logs and technical documentation to demonstrate compliance with the law.
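The inventory-and-classify step can start as a simple register. The Python sketch below assumes that a person has already assigned each system's risk tier, because classification under the Act is a legal judgment that code cannot infer. It lists a follow-up action for each system, with the most urgent tier first. The class names, system names, and action texts are hypothetical and only summarise this article.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    # Defined from most to least urgent; action_plan relies on this order.
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Simplified follow-up per tier (a summary of this article, not legal advice).
NEXT_STEP = {
    RiskTier.UNACCEPTABLE: "Stop using immediately",
    RiskTier.HIGH: "Check data quality, human oversight, logging and documentation",
    RiskTier.LIMITED: "Tell people they are dealing with AI",
    RiskTier.MINIMAL: "No specific obligations; ensure staff AI literacy",
}


@dataclass
class AISystem:
    name: str
    purpose: str
    tier: RiskTier  # assigned by a human reviewer, not inferred by code


def action_plan(inventory: list[AISystem]) -> list[tuple[str, str]]:
    """Return (system name, next step) pairs, most urgent tier first."""
    order = list(RiskTier)  # enum definition order = urgency order
    ranked = sorted(inventory, key=lambda s: order.index(s.tier))
    return [(s.name, NEXT_STEP[s.tier]) for s in ranked]


# Hypothetical inventory of an example organisation.
inventory = [
    AISystem("SpamGuard", "email spam filter", RiskTier.MINIMAL),
    AISystem("CV Screener", "shortlists job applicants", RiskTier.HIGH),
    AISystem("HelpBot", "customer service chatbot", RiskTier.LIMITED),
]

for name, step in action_plan(inventory):
    print(f"{name}: {step}")
```

Keeping the register as structured data also makes it easy to export and retain as part of your compliance documentation.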

By acting proactively now, you not only minimise legal risks but also position your organisation as a trustworthy and ethical player in the fast-growing AI market.